Volume 6, Issue 4 (Winter 2017)

PTJ 2017, 6(4): 217-226

Azizabadi Farahani P, Mokhtarinia H, Osqueizadeh R. Design of an Ergonomics Assessment Tool for Playroom of Preschool Children. PTJ 2017; 6 (4) :217-226

URL: http://ptj.uswr.ac.ir/article-1-257-en.html

1- Department of Ergonomics, University of Social Welfare and Rehabilitation Sciences, Tehran, Iran.

1. Introduction

Children are valuable social capital and shape the future of society. Education in childhood, the period when personality and habits are formed, shapes the future of both the individual and society. Social change and the growing employment of women have increased the need for child daycare centers, where children spend much of the day. The first months and years of a child’s life play a major role in the development of his or her mental, physical, and intellectual dimensions. Hence, proper physical and emotional nourishment, sufficient sleep, and sensory stimulation in childhood are more important than ever. Studies have shown that the surrounding environment has a marked effect on our feelings, thinking, behavior, and quality of life. The environment can serve our needs or act against them [1]. These findings confirm the importance of designing the space and facilities of childcare centers to suit children’s characteristics and needs. Studies indicate that children recall their surroundings much better than people and things; therefore, attention to design details in their care space is especially necessary [2].

Physiologists and educators believe that children learn mainly through play. Therefore, children’s educational environments should be designed to suit their games [3]. Ergonomics is the discipline that studies human adaptation to the surrounding environment and tries to reduce the mismatch between the user and the environment during the design process. In this process, the role of the ergonomist is to understand the needs and characteristics of the user and to turn this knowledge into principles and rules that designers can follow as design criteria [4]. To achieve an environmental design tailored to the needs and characteristics of children, and to verify whether the current design of preschool playrooms complies with user-centered design principles, a proper tool is needed. One of the challenges in achieving an environment suited to children’s characteristics is the lack of a suitable tool for identifying defects in the present design. Therefore, the purpose of this study is to determine the basic parameters for designing an environment for children, based on ergonomic evaluation with a collaborative approach. Ultimately, the study leads to the compilation of a set of considerations that help designers and supervisors of preschool centers improve spaces for children.

2. Materials and Methods

This is an analytical descriptive study. After the evaluation tool was developed, its validity was verified through content validity and face validity. Its reliability was investigated using the test-retest method.

Development of assessment tool

In the first stage, the initial parameters of the tool were determined in two ways. A: The study of resources was the core part of this research. First, a general literature search was done to become acquainted with the resources, previous studies, and pioneering theories concerning children’s environments. This included searching websites such as PubMed, Elsevier, Google Scholar, Scopus, Google, Springer, SAGE, and Wiley with the keywords “children environment”, “child care center environment”, and “preschool environment” (as well as other terms concerning children’s environment such as interior design, environmental quality, play area safety, child care center setting, noise, children injuries, child-friendly, and play type), and searching libraries for resources, including student theses and books, that were related to children’s environment.

The resources obtained from the search were categorized and the most relevant were selected. Through a brief overview of these resources, the general dimensions of the search were identified. New texts were then found by searching within the specified fields using relevant keywords. At this stage, in addition to the resources found in each field, we reached further resources by following the keywords and references mentioned in each text. This search continued in each field until no new information was found. Because collecting the resources produced a high volume of information, a note-taking system was needed. For this purpose, two files were kept: a resource information file and a content file. The resource information file recorded the author’s name, year of publication, the journal or website from which the source was taken, and its general theme; the content file recorded a summary of the important points and the findings of each source. Then, by carefully studying the content file, the parameters related to the design, arrangement, and play environment of the kindergarten playroom were extracted. At this stage we tried not to miss any relevant data.

B: Site visits were also carried out to compare the parameters extracted from the sources with the current conditions in the playrooms of preschool centers in Iran. Since nearly all the sources studied related to other countries, it was necessary to visit preschool centers to adapt and localize these parameters and to continue the study with better insight.

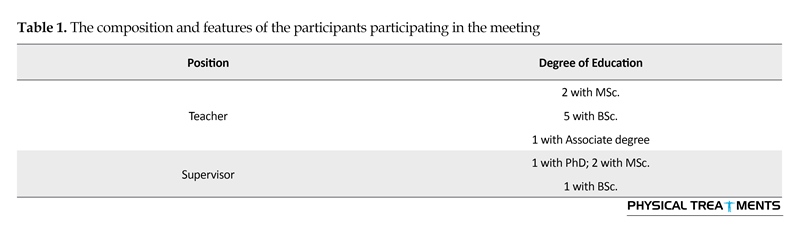

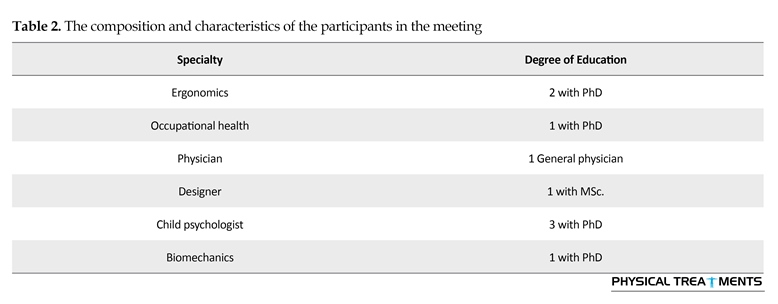

The second stage was the collaborative phase of the project. At this stage, the extracted items were first examined and challenged using the focus group method in two sessions with professionals and staff of preschool centers [5-7]. The first meeting was attended by 12 teachers and supervisors of preschool centers in Tehran (Table 1). Another meeting was attended by 9 specialists in child development, psychology, environmental ergonomics, and design (Table 2). Because of the nature of this tool, we tried to invite experts from the different disciplines related to the topic to ensure the comprehensiveness of judgments. At these meetings, the items were first written on the board and the attendees were asked to write down their opinions about the importance of each proposed item. Each item was then read aloud and the attendees gave their opinions on it. With the participants’ permission, the discussions were recorded for use in checking the items and compiling the tool.

After the meetings, the audio files were transcribed and examined together with the facilitator’s notes taken during the meetings. Then, at a meeting of the research team, the information from these meetings was summarized and the final items of the evaluation tool were identified. The items were also divided into 5 categories. The output of these meetings was a tool whose validity and reliability were evaluated in the next steps.

Validity assessment

Content validity indicates whether the content of the questionnaire is fit for and relevant to the purpose of the study. In other words, experts examine this property to ensure that the content of the questions reflects the complete range of the features under study [8-10]. To determine the content validity of the tool in this research, both qualitative and quantitative methods were used. Holcny describes content validity as a technique used for deduction that proceeds purposefully and systematically to identify the specific characteristics of a message [11]. To determine the content validity, the methods proposed by Chadwick et al. and Lawshe were used [12, 13]. Chadwick et al. suggest that the content validity method is applicable when an information exchange tool (one that contains relatively clear and inferential messages) is going to be introduced and applied in practice. Lawshe also held that the content validity approach should be used when a high level of abstraction and insight is needed for judgment and when the scope of inference in and around a message is extensive.

Lawshe devised a model for determining content validity in which the questionnaire, whose role is to guide the panel members, is given to the panel so that members can judge accurately the necessity of each component of the tool. In addition, they are asked to comment on each item according to the judgment criteria given. Member responses are coded as follows: E: Essential; U: Useful but not essential; and N: Not necessary. In this study, the Lawshe model was adopted. Since the judgment criteria can be perceived differently, we decided to word the ratings in this tool as “completely relevant”, “relevant”, “relatively relevant”, and “unrelated”.

The order of responses in this scale, which is modeled on a Likert-type scale, is clearer. At this stage, the panel members had to be identified. A panel of validity evaluators should usually consist of professionals in the field of the tool so that their judgments are correct and accurate. Although Lawshe’s method sets the minimum number of members at 4, it was decided to use as many members as possible in this study. Because of the small number of specialists in child ergonomics and the multidisciplinary content of the study, it was decided that at least 8 and at most 16 subjects would participate in the validity assessment. A minimum of 8 was chosen because it is twice the number recommended by Lawshe, so that the needed agreement and a validity coefficient of more than 60% could be reached with a higher level of confidence.

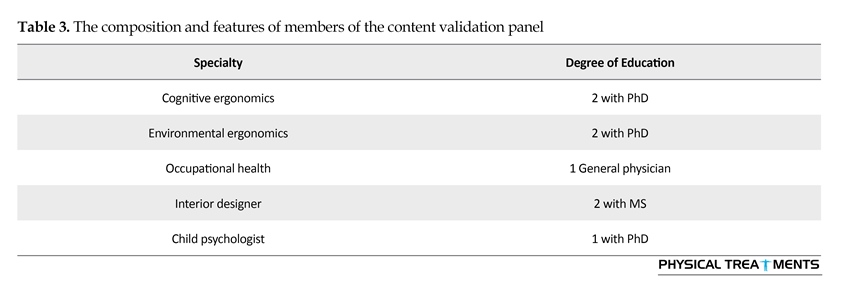

This is the value accepted by Chadwick et al. as the minimum validity coefficient. A maximum of 16 subjects was chosen because it is twice the minimum and allows for problems such as unreturned questionnaires. A total of 20 specialists were identified in ergonomics, psychology, educational sciences, and interior design, of whom 16 agreed to participate. The proposed tool was then sent to them by e-mail. Finally, 8 completed questionnaires were returned to the researchers (Table 3), a return rate of 50%. To evaluate the quality of the content, the specialists were asked to study the tool carefully and provide their corrective views in detailed written form. After the expert assessments were collected and discussed with the research team, the required changes were made to the tool.

In the quantitative review of the content, we used the Content Validity Ratio (CVR) to ensure that the most important and accurate content was selected, and the Content Validity Index (CVI) to ensure that the tool items were well designed for content assessment. Panel member responses were quantified using the CVR equation.

$$\mathrm{CVR} = \frac{n_e - n/2}{n/2}$$

where $n_e$ is the number of panel members who rated the dimension or question as “necessary” (in this study, the number of panel members who rated the item “completely relevant” or “relevant”), $n$ is the total number of panel members, and CVR is a direct linear transformation of the proportion of panel members who chose “necessary”. CVR takes the following values: 1) When fewer than half of the panel members choose “necessary”, CVR is negative. 2) When exactly half choose “necessary” and the other half choose other options, CVR is 0. 3) When all panel members choose “necessary”, CVR is 1. 4) When more than half but not all choose “necessary”, CVR lies between 0 and 0.99.
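The CVR equation and its four cases can be checked with a short Python sketch. The function name and the panel of 8 used in the loop are illustrative assumptions, not part of the study's materials.

```python
def content_validity_ratio(n_essential: int, n_panel: int) -> float:
    """Lawshe's CVR = (n_e - n/2) / (n/2).

    n_essential: panel members who rated the item "necessary"
                 (here: "completely relevant" or "relevant").
    n_panel:     total number of panel members.
    """
    if n_panel <= 0:
        raise ValueError("n_panel must be positive")
    if not 0 <= n_essential <= n_panel:
        raise ValueError("n_essential must lie between 0 and n_panel")
    half = n_panel / 2
    return (n_essential - half) / half


# Hypothetical panel of 8 members: fewer than half, exactly half,
# more than half, and all members choosing "necessary".
for n_e in (3, 4, 6, 8):
    print(n_e, content_validity_ratio(n_e, 8))
```

With 8 panel members, the ratio moves in steps of 0.25, so 7 of 8 "necessary" ratings give a CVR of 0.75.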

The impact score of the items was also calculated. For this purpose, the 4-point scale defined for evaluating content validity was weighted as follows: unrelated: 0; relatively relevant: 1; relevant and completely relevant: 2. The impact scores were then calculated using the formula:

Impact score = Importance × Frequency (%)

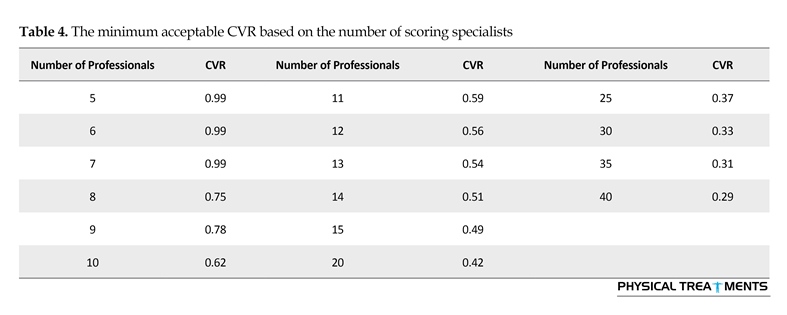

An item is accepted if its CVR value is between 0 and 0.75 and its mean judgment score is 1.5 or higher (Table 4). Such a CVR value shows that more than half of the panel members selected the “completely relevant” or “relevant” options (“necessary” on the Lawshe scale). A mean of 1.5 or higher indicates that the average judgment is closer to the “completely relevant” and “relevant” options. In other words, a mean of 1.5 or higher corresponds to at least 75% of the maximum mean of 2, which is above the minimum accepted validity coefficient of 60%.
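As a rough illustration of this acceptance rule, the sketch below scores one item from a list of panel ratings. It rests on two assumptions that the text does not confirm: the mean judgment is read as the weight of each option multiplied by the share of panel members choosing it, and any positive CVR is treated as passing the CVR condition (the upper bound is not enforced). The names `WEIGHTS`, `NECESSARY`, and `evaluate_item`, and the ratings themselves, are hypothetical.

```python
# Weights stated in the text for the 4-point relevance scale.
WEIGHTS = {
    "unrelated": 0,
    "relatively relevant": 1,
    "relevant": 2,
    "completely relevant": 2,
}
# Options counted as "necessary" on the Lawshe scale in this study.
NECESSARY = {"relevant", "completely relevant"}


def evaluate_item(ratings):
    """Return (cvr, mean_judgment, accepted) for one item."""
    n = len(ratings)
    if n == 0:
        raise ValueError("at least one rating is required")
    n_e = sum(r in NECESSARY for r in ratings)
    cvr = (n_e - n / 2) / (n / 2)
    # Mean judgment: average weight, i.e. sum of weight x share of raters.
    mean_judgment = sum(WEIGHTS[r] for r in ratings) / n
    # Assumption: a positive CVR plus a mean of at least 1.5 is enough.
    accepted = cvr > 0 and mean_judgment >= 1.5
    return cvr, mean_judgment, accepted


# Hypothetical ratings from a panel of 8.
ratings = (["completely relevant"] * 4 + ["relevant"] * 2
           + ["relatively relevant"] + ["unrelated"])
print(evaluate_item(ratings))
```

In this made-up example, 6 of 8 members rate the item as necessary, giving a CVR of 0.5 and a mean judgment of 1.625, so the item would be retained under the stated assumptions.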

After the CVR was calculated, the CVI was calculated to determine the comprehensiveness of the judgments about the validity of the tool. CVI is the mean of the CVR values of the items retained in the validated tool. The higher the content validity of the tool, the closer the CVI is to 0.99, and vice versa. The CVI is calculated using the following formula:

$$\mathrm{CVI} = \frac{\sum \mathrm{CVR}}{\text{number of retained items}}$$
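A minimal sketch of this calculation follows. The function name and the four CVR values are hypothetical, chosen only to show that CVI is the arithmetic mean of the retained items' CVR values.

```python
def content_validity_index(retained_cvrs):
    """CVI = sum of the retained items' CVR values / number of retained items."""
    values = list(retained_cvrs)
    if not values:
        raise ValueError("at least one retained item is required")
    return sum(values) / len(values)


# Hypothetical CVR values for four retained items.
print(content_validity_index([1.0, 0.75, 0.5, 0.75]))

# The totals reported in the Results (sum of CVR = 26.25 over 36 items).
print(round(26.25 / 36, 3))
```

The second line reproduces the arithmetic reported in the Results from its two published totals; the individual item CVRs are not available here.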

After the content validity of the tool was measured and the inappropriate items were omitted, its face validity was examined. A tool has face validity when its items or questions appear, on the surface, to be relevant to the topic they are designed to measure [8-10]. Here we used the opinions of 15 inspectors from the Northwest Health Center in Tehran to evaluate face validity qualitatively. Face validity was evaluated under three criteria, “clear and transparent”, “simplicity”, and “layout and style”, each rated on a 4-point Likert-type scale (totally agree, agree, disagree, and totally disagree). The inspectors were also asked to comment on the items for which they chose “disagree” or “totally disagree”. The wording of the items was then modified based on the collected comments.

Reliability test

Reliability is a property of measuring instruments that indicates the extent to which the instrument yields the same results under the same conditions [8-10]. To calculate the reliability of the tool in this research, we used the test-retest method and the Intraclass Correlation Coefficient (ICC). The playrooms of 30 kindergartens in the 2nd, 5th, and 6th districts of Tehran were assessed by one of the researchers (P. F.). After a week, the playrooms of these centers were assessed again, and the correlation coefficient between the results of the two assessments with the compiled tool was calculated.
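The text does not state which form of the ICC was used. As one possible illustration, the sketch below computes the two-way, single-measure, absolute-agreement form, often written ICC(2,1), from two visits per playroom. The choice of this form, the function name, and the scores are all assumptions made for the example.

```python
def icc_absolute_agreement(scores):
    """ICC(2,1) from a table with one row per playroom and one column per visit."""
    n = len(scores)
    k = len(scores[0]) if n else 0
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need at least 2 rows and 2 equally sized columns")

    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Two-way ANOVA sums of squares: playrooms, visits, residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    denominator = ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    if denominator == 0:
        raise ValueError("ICC is undefined when all scores are identical")
    return (ms_rows - ms_error) / denominator


# Hypothetical total tool scores for 5 playrooms: [first visit, second visit].
visits = [[30, 31], [25, 25], [34, 33], [28, 28], [22, 23]]
print(round(icc_absolute_agreement(visits), 3))
```

This form penalizes a systematic shift between visits as well as random disagreement, which is why it is a common choice for test-retest designs; a consistency-type ICC would ignore such a shift.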

3. Results

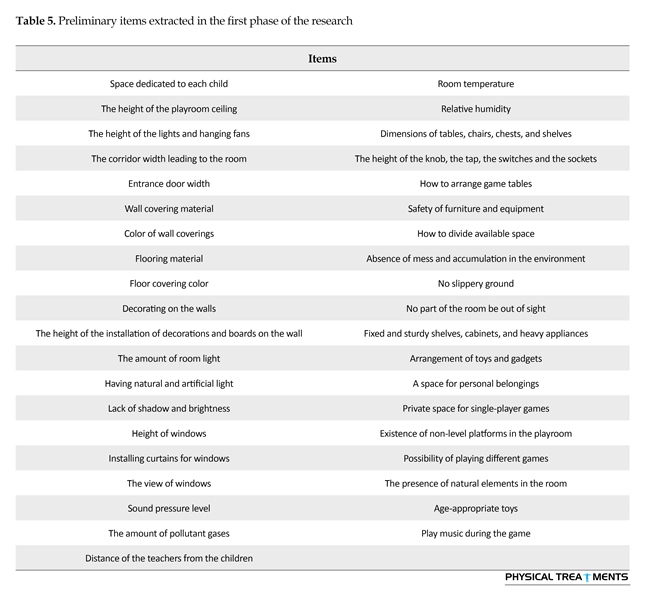

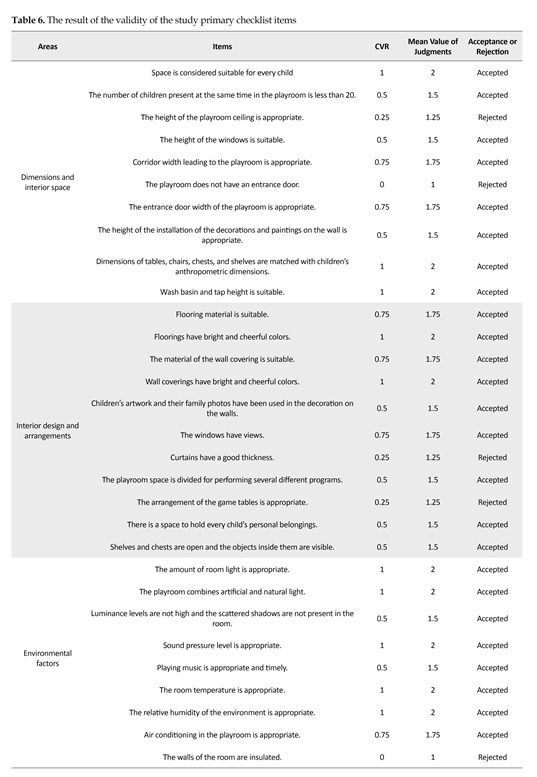

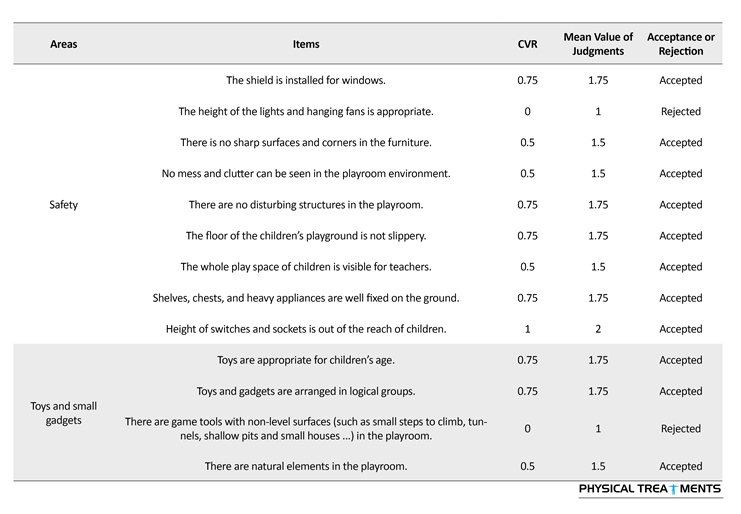

After reviewing the sources, a list of extracted items was prepared (Table 5). This list was evaluated during the focus group meetings and the meeting with the research team, and the initial version of the evaluation tool was then prepared with 43 items. Its content validity was evaluated, and the results are presented in Table 6. In the present study, 7 questions were omitted after validity assessment, leaving 36 items. The content validity index was obtained as follows:

$$\mathrm{CVI} = \frac{26.25}{36} \approx 0.729$$

Therefore, an ergonomic evaluation tool for kindergarten playrooms was designed, with a CVI of 0.729 (72.9%), which is acceptable.

Also, after the face validity of the tool was evaluated, two items were slightly reworded as follows: 1) The maximum number of children in a playroom at the same time is less than 20; and 2) There are natural elements (e.g., pot, soil, wood, aquarium, etc.) in the playroom. Reliability was estimated using the test-retest method and the ICC index, whose value was 0.99.

4. Discussion

Preschool centers play an important role in the lives of children. The design of preschool classrooms should be guided by children’s characteristics. To create an environment that supports the growth and development of children’s skills, it is especially important to consider their physiological, psychological, and anthropometric characteristics when selecting features and when designing and laying out their environments.

Children are the valuable social capital and build the society future. Education in childhood, the time of the formation of personality and creation of different habits, establishes the future of the individual and the society. Changes in societies and ever-increasing employment of women increase the need for child daycare centers and the children spend a lot of day time in these centers. The first months and years of the child’s life play a great role in the formation and growth of his or her mental, physical, and intellectual dimensions. Hence, proper physical and emotional nutrition, enough sleep and sensory stimulation in the childhood are more important than ever. Studies have shown that the surrounding environment has a dramatic effect on our feelings, thinking, behaviors, and quality of life. The environmental impact can serve our needs or act against it [1]. These findings confirm the importance of designing space and facilities of childcare centers appropriate to the children’s characteristics and needs. Studies indicate that children recall their surroundings much better than people and things, therefore, paying attention to design details in their caring space is of special necessity [2].

Physiologists and educators believe that children learn mainly through play. Therefore, designing children’s educational environments should be suitable for their games [3]. Ergonomics is the knowledge which focuses on the study of human adaptation to the surrounding environment and tries to reduce the mismatch between the user and the environment during the designing process. In this process, the role of the ergonomist is understanding the needs and features of user and turning this knowledge into principles and rules for devising so that designers can follow these rules as design criteria [4]. In order to achieve the environmental design tailored to the needs and characteristics of children and also to verify compliance of the current design of the playrooms of preschool centers with user-centered design principles, a proper tool should be used. One of the challenges ahead in achieving an environment appropriate to the characteristics of children is the lack of a suitable tool that can be used to determine the defects in the present design. Therefore, the purpose of conducting the study is to determine the basic parameters of designing an environment for children based on ergonomic evaluation with collaborative approach. Finally this study leads to compilation and establishment of a set of considerations which help designers and supervisors of preschool centers improve spaces for children.

2. Materials and Methods

This study is an analytical descriptive study. After developing the evaluation tool, to verify its validity, the content validity and face validity were used in this study . Its reliability was investigated using test-retest method.

Development of assessment tool

In the first stage, the initial parameters of the tool were determined by two ways; A: Study of resources were the core part of this research. First, a general search in the literature was done to get acquainted with resources, conducted studies, and the pioneering theories concerning children’s environments which included searching the websites such as PubMed, Elsevier, Google Scholar, Scopus, Google, Springer, SAGE, Wiley with the keywords of “children environment”, “child care center environment”, “preschool environment” (as well as other synonyms concerning children’s environment such as interior design, environmental quality, play area safety, child care center setting, noise, children injuries, child-friendly, play type) and search for resources in libraries, including student theses and existing books, which were relatively related to the children’s environment.

The resources obtained from the search were categorized and the most relevant sources were selected. Through a brief overview of these resources, general dimensions of the search were identified. Then, new texts were found by searching in the specified fields using relevant keywords. The search method for resources at this stage was in a way that in addition to the resources found in every field, we accessed other resources by searching for keywords and references mentioned in each text. The search by this method continued in every field until we found no new information. Since during collecting related resources, we were faced with high volume of information, we needed a note-taking system. For this purpose, two files were allocated for documents: resource information file and content file. In the resource information file, such information as author’s name, year of publication, magazine or websites where the source was taken from, and its general theme; and in the content file, an important summary and the source’s findings were recorded. Then by carefully studying the content file, the parameters related to design, the arrangement and playground environment of the playroom in the kindergarten were extracted. At this stage we tried not to miss any relevant data.

B: “Visiting the place” also carried out to compare the parameters extracted from the sources with the current conditions in the playroom of preschool centers in Iran. Since nearly all the sources studied were related to other countries, it was necessary to visit preschool centers to adapt and localize these parameters and further the study with a better insight.

The second stage is the collaborative phase of the project. In this level, the extracted items were first investigated and challenged through a focused group method in two sessions with professionals and staff of preschool centers [5-7]. At the first meeting, 12 teachers and supervisors were present in preschool centers in Tehran (Table 1). A meeting was also attended by 9 specialists in the fields of child development, psychology, environmental ergonomics, and designing (Table 2). Because of the nature of this tool, in selecting subjects, we tried to invite experts from different disciplines related to the topic for the meetings to ensure the comprehensiveness of judgments. At these meetings, items were first written on the board and the attendees were asked to write down their opinions about the importance of each proposed item. Then each item was read and the attendees mentioned their opinions on the item raised. With the permission of the participants, the meeting’s discussions were recorded to be used for checking items and compilation of tool.

After the meetings, audio files were transcribed and examined with facilitator notes during the meeting. Then at a meeting and in the presence of members of the research team, information from these meetings were concluded and final items of the evaluation tool were identified. Also, items were divided into 5 categories. Eventually, the output of these meetings was a tool whose validity and reliability was evaluated in the next steps.

Validity assessment

Content validity specifies that if the content of the questionnaire fit and relevant to the purpose of the study. In other words, this feature is investigated by experts in order to ensure that the content of the questions reflects a complete range of studied features [8-10]. In order to determine the content validity of the tool in this research, qualitative and quantitative content validity methods were used. Holcny describes content validity as a technique used for deduction and proceeds purposefully and systematically to identify the specific characteristics of a message [11]. To determine the content validity, the proposed methods of Chadwick et al. and Lawshe were used [12, 13]. Chadwick et al. suggest that content validity method is applicable when an information exchange tool (which contains relatively clear and inferential messages) is going to be introduced and applied in a practical way. Lawshe also believed that when high levels of the abstract and insight is needed for judgment and in the case when the scope of inference in the content and around a message is extensive, researchers should use content validity approach.

Lawshe devised a model for determining content validity in a way that the questionnaire is provided to the panel group, the role of which is to guide panel members, making it possible for members to judge accurately based on the necessity of the tool components. In addition, they will be asked to comment on each item on the judgment criteria given. Member responses are coded as follows; E: Essential, U: Useful but not essential, and N: Not necessary. In this study, Lawshe model was adopted. Since different perceptions are possible out of the judgment criteria, we decided to rate the judgment criteria in this tool as “completely relevant”, “relevant”, “relatively relevant”, and “unrelated”.

The response sequence in this scale, which is modeled on the Likert-type scale, is more evident. At this stage the panel members should be identified. Usually, members of a panel of validity evaluators should be formed of professionals in the field of the tool to provide correct and accurate judgments. Although the proposed method of Lawshe states the minimum number of members as 4, but it was decided to use as many members as possible in this study. Due to problems such as a small number of specialists in the field of child ergonomics and also multi-disciplinary content of the study, it was decided that at least 8 and up to 16 subjects participated in the process of validity assessment. At least 8 subjects were chosen because it is twice as the recommended number of Lawshe in order to reach the needed agreement and a validity coefficient of more than 60% with a higher level of confidence.

This is the amount accepted as the minimum coefficient of validity factor analysis by Chadwick et al. A maximum number of 16 subjects were selected because it is twice the minimum value and was considered to overcome problems such as failure to return the questionnaire. A total of 20 specialists were identified in the fields of ergonomics, psychology, educational sciences, and interior design. Then, 16 subjects agreed to participate in this study. Next, the proposed tool was sent by e-mail to them. Finally, 8 completed questionnaires were returned (Table 3) and delivered to researchers so the return rate was 50%. After carefully studying the tool, to evaluate the quality of the content, they were asked to provide their corrective views in detailed and written form. After collecting expert assessments and consulting with members of the research team, required changes were made to the tool.

In quantitative review of the content, we used the content validity ratio index to be ensured of selecting the most important and accurate content, and content validity index to be ensured that the tool items be well designed for content assessment. Panel member responses were quantified based on CVR equation.

CVR=(ne-n/2)/(n/2)

, where ne is the number of panel members who have identified that dimension or question as “necessary” (In this study, the sum of subjects which gave each item “completely relevant” and “relevant” scores), n is the total number of panel members, and CVR is the linear and direct conversion of panel members who have chosen the phrase “necessary”. The values assigned to CVR are as follows: 1) When less than half of the subjects choose “necessary” option, CVR is negative. 2) When half of the subjects choose “necessary” options and the other half choose other options, CVR is 0. 3) When all subjects choose the “necessary” option, CVR is 1. 4) When the number of subjects who choose the “necessary” option, include more than half but not all subjects, CVR is between 0% and 99%.

The impact score of items was also calculated. For this purpose, the defined 4-point criteria scales to evaluate content validity are weighted as follows:Unrelated: 0, relatively relevant: 1, relevant and completely relevant: 2 Then the impact scores are calculated using the formula:

Importance×Frequency (Percentage)=Impact score

An item is accepted if the CVR value is between 0% and 75% and the mean number of judgments is 1.5 or higher (Table 4). This value of CVR shows that more than half of the panel members selected “completely relevant” or “relevant” options (necessary in Lawshe scale). The mean value equal or higher than 1.5 indicates that the average judgments are closer to “completely relevant” and “relevant” options. On the other hand, the mean value equal or higher than 1.5 shows that the average judgments are equal to 75% of the maximum average of 2, which is higher than the minimum accepted value of 60% determined for reliability of validity.

After calculating CVR, to determine the comprehensiveness of judgments about validity of the tool, CVI index was calculated. CVI is the average CVR values of remaining items in the validated tool. The higher the content validity of the tool, the more CVI index closer to 0.99 and vice versa. The CVI is calculated using the following formula:

CVI=(∑CVR)/(retained numbers)

After measuring the content validity of the tool and omitting the inappropriate items, its face validity was examined. A tool has a formal validity when its articles or questions are apparently similar to the topic, which are prepared to measure it [8-10]. Here we used the opinions of 15 inspectors from the Northwest Health Center in Tehran to qualitatively evaluate face validity. Face validity was evaluated under three criteria of “clear and transparent”, “simplicity”, and “layout and style” for each of which 4-point Likert-type scale (including: totally agree, agree, disagree, and totally disagree) was determined. The inspectors were also asked to note their comments about items that they have chosen “disagree” or “totally disagree”. Then, items were modified in terms of wording and use of words through collecting comments.

Reliability test

Reliability is one of the properties of measuring instruments and determines how much the measurement tool yields the same results under the same conditions [8-10]. To calculate the reliability of measurement tool in this research, we used the method of test-retest and the Intra-class Correlation Coefficient (ICC). The playrooms of 30 kindergartens in the 2nd, 5th, and 6th district of Tehran were investigated by one of the researchers (P. F.). After a week, once again, the playrooms of these centers were evaluated and the correlation coefficient between the results of two evaluations by the compiled tool was calculated.

3. Results

After reviewing the sources, a list of extracted items was prepared (Table 5). This list was evaluated during the focus group meetings and the meeting with the research team and then, the initial version of the evaluation tool was prepared with 43 items. Its content validity was evaluated, the results of which are presented in Table 6. In the recent study, after assessing validity, 7 questions were omitted. The value of its content validity index was obtained using the following equation:

CVI = 26.25/36 = 0.729 (72.9%)

Thus, an ergonomic evaluation tool for kindergarten playrooms was designed, with a CVI of 72.9%, which is acceptable.
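
The CVI arithmetic can be checked with a few lines of Python. The sketch is illustrative: the function names are hypothetical, the CVR formula is Lawshe's [13], and the panel size used in the CVR example is an assumption. Only the totals 26.25 and 36 come from the text.

```python
def lawshe_cvr(n_essential, n_experts):
    """Lawshe's content validity ratio: (n_e - N/2) / (N/2)."""
    half = n_experts / 2
    return (n_essential - half) / half


def content_validity_index(cvr_values):
    """CVI as the mean CVR of the retained items."""
    if not cvr_values:
        raise ValueError("at least one retained item is required")
    return sum(cvr_values) / len(cvr_values)


# Hypothetical item: 9 of 10 experts rate it as essential.
print(f"CVR = {lawshe_cvr(9, 10):.2f}")

# Totals reported in the text: sum of CVR = 26.25 over 36 retained items.
cvi = 26.25 / 36
print(f"CVI = {cvi:.3f} ({cvi:.1%})")
```

The ratio 26.25/36 is about 0.729, which corresponds to the 72.9% reported in the text.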

After the face validity of the tool had been evaluated, two items were slightly reworded as follows: 1) The maximum number of children in a playroom at the same time is less than 20; and 2) There are natural elements (e.g., pot, soil, wood, aquarium, etc.) in the playroom. Reliability was estimated with the test-retest method and the ICC index. The value of this index was 0.99.

4. Discussion

Preschool centers play an important role in the lives of children. The design of preschool classrooms should be guided by children’s characteristics. To create an environment that supports the growth and development of children’s skills, it is especially important to consider children’s physiological, psychological, and anthropometric characteristics when selecting the features, design, and layout of their environments.

Children are interested in experiencing a wide range of activities; by dividing the classroom space, several different activities can take place simultaneously in the class. This helps the child participate in activities according to his or her abilities and interests and keep track of all the activities currently under way in the class. The main advantages of this scheme therefore include promotion of the child’s independence, strengthening of his or her power of choice, learning of new skills, and increased self-esteem [14].

Selecting appropriate colors, materials, and textures for the walls, floor, ceiling, and appliances in the classroom can help create a fun learning environment for children and support the center in achieving its educational goals. Proper use of color in the visual design creates visual attraction for children, which increases their interest and motivates them to take part in activities [15]. Because children in the early years of life gain experience through tactile stimulation, using a variety of materials with different textures helps the child learn [15]. Various studies show that noise contributes to cognitive impairment and reduced learning in children; measures to reduce classroom noise should therefore be part of the design [16].

Because the quality of light and fresh air inside the class is important for the physical and psychological growth of children, the design of the center should pay special attention to sunlight in the classroom, and the center should be equipped with a ventilation system that meets the standards [17].

To evaluate the reliability of this tool, 30 preschool centers in districts 2, 5, and 6 of Tehran Municipality were selected because they were easy to visit. One week after the first visit, these centers were visited again for re-evaluation. Comparison of the first and second visits showed that the results were nearly identical in all centers. Since the subject being evaluated was the physical space of these centers, and the elements of a space are unlikely to change within a week without intervention, this result was expected.

The interaction of the child with the environment has a significant effect on his or her growth. This makes clear the importance of research on the details of the environment and the effect of each on the child, and of compiling a set of considerations that help designers and teachers in preschool centers and kindergartens improve children’s spaces. Evaluating the features of preschool centers requires an appropriate tool. According to the study results, the assessment tool developed here is well suited to evaluating these environments.

Acknowledgements

This paper was extracted from the MSc. thesis of the first author, Parisa Azizabadi Farahani, in the Department of Ergonomics, University of Social Welfare and Rehabilitation Sciences, Tehran.

Conflict of Interest

The authors declared no conflicts of interest.

References

[1]Shaw J. The building blocks of designing early childhood educational environments. Undergraduate Research Journal For The Human Sciences. 2010; 9(1).

[2]Olds AR. Child care design guide. New York: McGraw-Hill; 2000.

[3]Bainbridge WS. Berkshire encyclopedia of human-computer interaction. Massachusetts: Berkshire Publishing; 2004.

[4]Sanders EBN. From user-centered to participatory design approaches. Contemporary Trends Institute Series. 2002; 1-8. doi: 10.1201/9780203301302.ch1

[5]Goss JD, Leinbach TR. Focus groups as alternative research practice: Experience with transmigrants in Indonesia. Area. 1996; 28(2):115-23.

[6]Powell RA, Single HM. Focus groups. International Journal for Quality in Health Care. 1996; 8(5):499-504. doi: 10.1093/intqhc/8.5.499

[7]Hoppe MJ, Wells EA, Morrison DM, Gillmore MR, Wilsdon A. Using focus groups to discuss sensitive topics with children. Evaluation Review. 1995; 19(1):102-14. doi: 10.1177/0193841x9501900105

[8]Parsian N, Dunning T. Developing and validating a questionnaire to measure spirituality: A psychometric process. Global Journal of Health Science. 2009; 1(1):2-11. doi: 10.5539/gjhs.v1n1p2

[9]Brancato G, Macchia S, Murgia M, Signore M, Simeoni G, Blanke K, et al. Handbook of recommended practices for questionnaire development and testing in the European statistical system. Luxembourg: Eurostat; 2006.

[10]Del Greco L, Walop W, McCarthy RH. Questionnaire development: 2. Validity and reliability. Canadian Medical Association Journal. 1987; 136(7):699-700. PMCID: PMC1491926

[11]Cavanagh S. Content analysis: Concepts, methods and applications. Nurse researcher. 1997; 4(3):5-13. doi: 10.7748/nr1997.04.4.3.5.c5869

[12]Chadwick BA, Bahr H, Albrecht S. Social science research methods. Englewood Cliffs: Prentice-Hall; 1984.

[13]Lawshe CH. A quantitative approach to content validity. Personnel Psychology. 1975; 28(4):563-75. doi: 10.1111/j.1744-6570.1975.tb01393.x

[14]GSA G. Child care center design guide. New York: Public Buildings Service Office of Child Care; 2003.

[15]Ata S, Deniz A, Akman B. The physical environment factors in preschools in terms of environmental psychology: A review. Procedia-Social and Behavioral Sciences. 2012; 46:2034-9. doi: 10.1016/j.sbspro.2012.05.424

[16]Linting M, Groeneveld MG, Vermeer HJ, van IJzendoorn MH. Threshold for noise in daycare: Noise level and noise variability are associated with child wellbeing in home-based childcare. Early Childhood Research Quarterly. 2013; 28(4):960-71. doi: 10.1016/j.ecresq.2013.03.005

[17]Zuraimi M, Tham K. Indoor air quality and its determinants in tropical child care centers. Atmospheric Environment. 2008; 42(9):2225-39. doi: 10.1016/j.atmosenv.2007.11.041

Type of Study: Research | Subject: Sport injury and corrective exercises

Received: 2016/09/17 | Accepted: 2017/04/15 | Published: 2017/08/6

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.